What is a neural network?

A neural network is a computational system inspired by the human brain that learns to perform tasks by analyzing examples. It consists of interconnected nodes called artificial neurons organized in layers that process information and make predictions without explicit programming.

Neural networks are a type of machine learning algorithm that mimics the complex functions of the human brain. Instead of following pre-defined rules, these networks automatically learn patterns directly from data. They form the foundation of modern artificial intelligence and are revolutionizing how businesses automate complex processes.

Key Components of Neural Networks

Every neural network comprises several essential elements –

Neurons (Nodes) – The basic computational units that receive inputs and process data through mathematical functions.

Connections (Synapses) – Links between neurons that carry information, regulated by weights and biases that determine connection strength.

Weights and Biases – Parameters that control how strongly each connection influences the output. Weights represent connection strength, while biases allow neurons to activate even with weak inputs.

Activation Functions – Mathematical functions that introduce non-linearity into the network, enabling it to learn complex relationships. Common activation functions include ReLU (Rectified Linear Unit), Sigmoid, and Tanh.

Layers – Neural networks are structured in three main types of layers –

- Input Layer – Receives raw data from the outside world

- Hidden Layers – Process and transform information through multiple computational steps

- Output Layer – Produces the final prediction or classification result

How Do Neural Networks Work?

Neural networks operate through two fundamental processes that enable learning – forward propagation and backpropagation.

Forward Propagation – Processing Data Through the Network

When you input data into a neural network, it travels forward through the network in a process called forward propagation –

Linear Transformation – Each neuron receives inputs multiplied by associated weights, combined with a bias term. This creates a weighted sum –

z = w₁x₁ + w₂x₂ + … + wₙxₙ + b

Where –

- w = weights

- x = input values

- b = bias term

Activation – The result is passed through an activation function, which introduces non-linearity. This enables the network to learn complex, non-linear patterns that simple linear models cannot capture.

Output Generation – The network produces an output based on these transformations across all layers.

Backpropagation – Learning from Mistakes

After forward propagation, the network evaluates its performance through backpropagation –

Loss Calculation – The network measures the difference between its predicted output and the actual target using a loss function. Common loss functions include Mean Squared Error for regression tasks and Cross-Entropy Loss for classification.

Gradient Computation – Using calculus (specifically, the chain rule), the network calculates how much each weight contributed to the error.

Weight Update – The network adjusts weights using optimization algorithms like Stochastic Gradient Descent (SGD), moving them in the direction that reduces error.

This iterative process – forward pass, error calculation, backward pass, and weight adjustment – repeats many times over training data until the network achieves desired accuracy.

Real-World Example – Email Spam Detection

Imagine training a neural network to detect spam emails –

- Input – Email features like keywords (“prize,” “money,” “win”), sender information, and link patterns

- Hidden Layers – Process the relative importance of each feature and combine them to identify patterns

- Output – A probability score indicating whether the email is spam (0-1 range)

The network learns through training that certain word combinations are strongly associated with spam, adjusting weights accordingly until it achieves high accuracy.

Neural Network Training

Training is the process of feeding data to a neural network and adjusting its weights and biases to minimize prediction errors. Understanding different training approaches is crucial for implementing neural networks effectively.

Types of Learning Approaches

Supervised Learning – The network learns from labeled data where both inputs and correct outputs are provided. This is the most common approach for tasks like image classification and spam detection.

Unsupervised Learning – The network discovers patterns in unlabeled data without predefined categories. Used for clustering and anomaly detection.

Reinforcement Learning – The network learns through trial and error, receiving rewards or penalties for actions. Commonly used in game AI and robotics.

The Training Process – Step by Step

- Architecture Selection – Determine the number of input nodes (based on features), hidden layers, nodes per layer, and output nodes (based on prediction categories)

- Random Initialization – Weights are randomly initialized to small values near zero

- Forward Propagation – Input training data passes through the network to generate predictions

- Loss Calculation – Measure the error between predictions and actual values

- Backpropagation – Calculate gradients showing how much each weight contributed to the error

- Weight Updates – Adjust weights using gradient descent to reduce error

- Iteration – Repeat steps 3-6 multiple times across the entire training dataset

- Validation – Test the model’s performance on unseen data to ensure it generalizes well

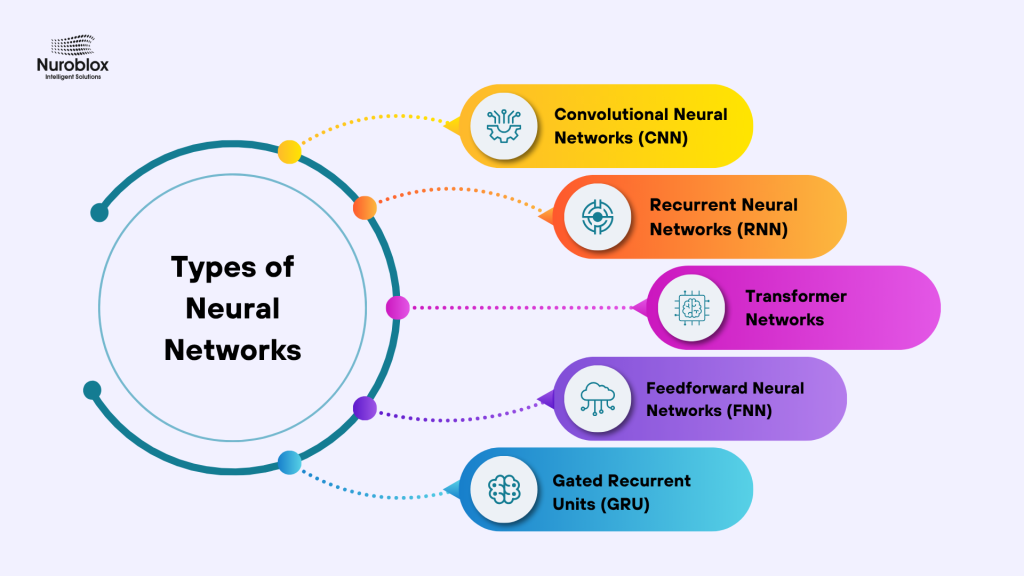

Types of Neural Networks

Different neural network architectures excel at different types of tasks. Selecting the right architecture is crucial for solving specific problems effectively.

Convolutional Neural Networks (CNN)

Specialized for processing grid-like data, particularly images. CNNs use convolutional layers with filters that slide across input data to detect patterns at different scales.

Key Features –

- Filters detect edges, textures, shapes, and higher-level features

- Significantly reduces parameters compared to fully connected networks

- Excellent at capturing spatial hierarchies in data

Real-World Applications –

- Facial recognition and face detection

- Medical image analysis (tumor detection, diabetic retinopathy)

- Object detection in autonomous vehicles

- Content moderation and image classification

Business Impact – Computer vision powers quality control in manufacturing, security systems, and medical diagnostics with human-level or better accuracy.

Recurrent Neural Networks (RNN)

Designed specifically for sequential data where current outputs depend on previous inputs. RNNs maintain internal memory through loops in their connections.

Structure –

- Each neuron retains a hidden state that captures information from previous time steps

- Connections form directed graphs along temporal sequences

- Ideal for variable-length input sequences

Challenges –

- Vanishing gradient problem – difficulty capturing long-term dependencies

- Limited parallelization due to sequential processing

Applications – Language translation, sentiment analysis, stock price prediction, weather forecasting

Transformer Networks

Revolutionary architecture using self-attention mechanisms to process entire sequences simultaneously rather than sequentially.

Breakthrough Features –

- Processes entire sequences in parallel (not step-by-step)

- Self-attention mechanism captures relationships between all sequence positions

- Scales efficiently to very long sequences

- Powers modern large language models

Current Dominance –

- Natural language processing (GPT, BERT, T5)

- Machine translation at scale

- Document summarization and text generation

- Speech processing and computer vision tasks

Advantage Over RNNs – Transformers eliminate sequential processing bottlenecks, enabling training on massive datasets and achieving state-of-the-art performance across multiple domains.

Feedforward Neural Networks (FNN)

The simplest and most fundamental architecture where data flows unidirectionally from input layer through hidden layers to output layer with no feedback loops.

Characteristics –

- Straightforward structure suitable for basic classification and regression

- Fast to train but less powerful for complex patterns

- Foundation for more advanced architectures

Use Cases – Image classification, price prediction, customer segmentation

Why Neural Networks Matter

Neural networks matter because they provide a unified computational framework for learning complex mappings directly from data. By composing layers of linear transformations and nonlinear activations, they approximate functions that capture high-dimensional dependencies far beyond the reach of traditional statistical models. This capacity to discover useful internal representations – embeddings, attention weights, feature hierarchies – underpins their success across structured and unstructured domains alike, from tabular forecasting to image and text understanding.

Their scalability is equally critical. With appropriate architectures, regularization, and optimization strategies, neural networks adapt from small prototypes to industrial-scale systems. Convolutional, recurrent, and attention-based models introduce inductive biases suited to spatial, temporal, and relational data, while normalization, residual connections, and distributed training ensure stable convergence. These design choices, coupled with advances in GPUs and data availability, have transformed neural networks from academic curiosities into production workhorses.

Ultimately, neural networks matter because they form the substrate of modern AI – flexible, differentiable systems capable of learning both discriminative and generative tasks. Whether classifying inputs or modeling full data distributions, they enable end-to-end learning pipelines driven by backpropagation. The same mathematical core now powers large language models, diffusion-based generators, and multimodal systems, demonstrating that the principles of representation learning and gradient-based optimization can scale to capture patterns at the frontier of artificial intelligence.

The Future of Neural Networks

Neural networks continue evolving with innovations in architecture design, training efficiency, and application domains. The combination of neural networks with other AI technologies (knowledge graphs, reasoning systems) promises even more powerful and interpretable AI systems.

For businesses, the trajectory is clear – neural networks are no longer experimental – they’re essential infrastructure for digital transformation, automation, and competitive success in the modern economy.

Conclusion

Neural networks represent a fundamental shift in how we approach complex problems. From their basic definition as brain-inspired computational systems to their sophisticated applications in autonomous vehicles, medical diagnosis, and business automation, these networks have become the engine of modern artificial intelligence.

Understanding neural networks is crucial for any organization pursuing digital transformation and intelligent automation. Whether you’re optimizing business processes, improving customer experiences, or detecting hidden patterns in your data, neural networks offer powerful solutions that traditional approaches cannot match.

As automation becomes increasingly important for competitive advantage, neural networks will play an ever-growing role in helping businesses work smarter, faster, and more effectively than ever before.