What is computer vision?

Computer vision is a subfield of artificial intelligence that enables computers and machines to understand, analyze, interpret, and extract meaningful information from images and videos. It combines machine learning, deep learning, and image processing techniques to train machines to replicate how humans perceive and process visual information – enabling computers to “see” and make sense of their environment.

Unlike humans who observe an entire image holistically, computer vision systems analyze images as pixel grids, breaking visual data into digestible components and using algorithms to detect patterns, edges, shapes, colors, and textures. This computational approach to visual understanding bridges the gap between human sight and machine perception, enabling automated analysis of vast volumes of visual data that would be impractical or impossible for humans to process manually.

How Computer Vision Works

Computer vision operates through a structured, multi-stage process that transforms raw visual data into meaningful insights and actionable outputs.

Image Acquisition and Preprocessing

The process begins with capturing visual data through cameras, sensors, or other imaging devices. This raw data then undergoes preprocessing to enhance quality and consistency. Images are resized to uniform dimensions, pixel values are normalized to a standard range (typically 0-255 for RGB values), and noise is removed through filtering techniques. For specific tasks like edge detection, color images may be converted to grayscale to simplify processing and focus the model on intensity changes rather than color variations.

Data Augmentation – To expand the effective size of training datasets and improve model robustness, images are artificially modified through rotation, flipping, cropping, and brightness adjustment. This technique prevents overfitting and ensures models perform well on real-world variations they haven’t seen during training.

Feature Extraction

At the heart of computer vision lies feature extraction – the process of identifying and isolating significant visual elements within images. The system scrutinizes visual data to find important features – edges where object boundaries occur, shapes that define object silhouettes, textures that provide surface characteristics, and patterns that repeat across images.

Convolutional Filters – CNNs use specialized filters that scan across image pixels, performing mathematical operations (convolution) to highlight specific features. The first layer of convolutional filters typically detects simple features like edges and simple textures. Subsequent layers build upon these simple features, detecting increasingly complex patterns – from combining edges into shapes, to recognizing parts, to identifying complete objects.

Hierarchical Feature Learning – Deep learning models automatically learn hierarchical feature representations. Lower layers learn simple, low-level features (edges, textures). Middle layers combine these into mid-level features (corners, simple shapes). Higher layers recognize high-level, semantic features (eyes, wheels, faces). This hierarchical approach mirrors how human visual perception processes information.

Model Prediction and Analysis

Trained machine learning or deep learning models analyze the extracted features, comparing them against patterns learned during training. The model performs mathematical operations on feature vectors, passing them through multiple layers of neurons where weights have been optimized to recognize important patterns.

Confidence Scoring – The model generates predictions with associated confidence scores indicating certainty. A high confidence score suggests the model is highly confident in its prediction, while low scores indicate uncertainty. Confidence thresholds can be adjusted to filter out unreliable predictions.

Bounding Box Generation – For object detection, the model generates bounding boxes specifying the precise location of detected objects within the image, typically as coordinates defining the corners or edges of rectangular regions.

Post-Processing and Output

Raw model outputs are refined into actionable results. For classification tasks, the highest-scoring class label is selected as the prediction. For detection tasks, overlapping bounding boxes from multiple detections are merged using techniques like Non-Maximum Suppression to eliminate duplicate detections.

Output Interpretation – Results are formatted for human interpretation or downstream processes—class labels with confidence scores, bounding boxes with object categories, segmentation masks showing pixel-level classifications, or other task-specific outputs. The entire process often happens in milliseconds, enabling real-time applications.

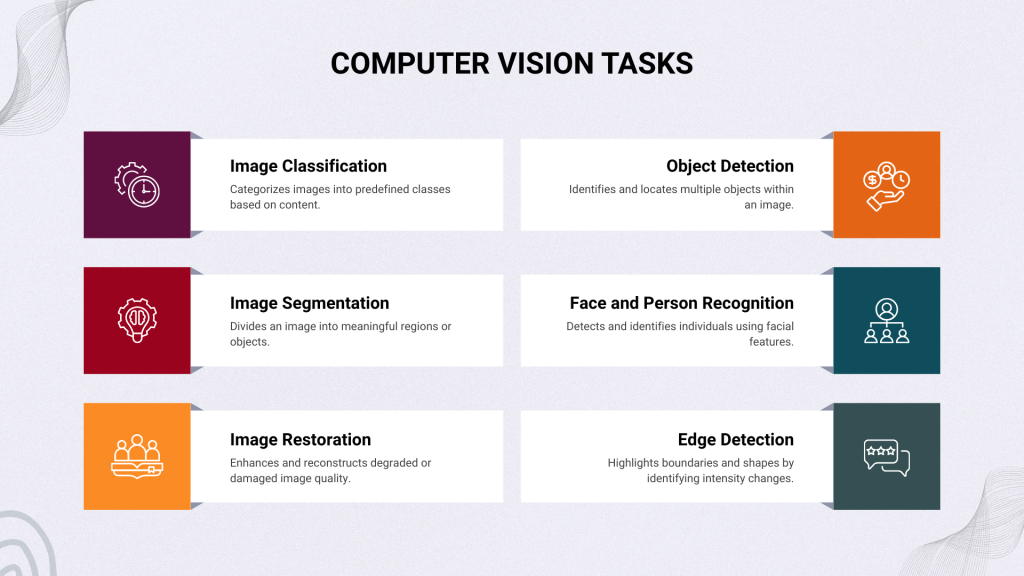

Computer Vision Tasks

Computer vision encompasses diverse task categories, each requiring specialized approaches and serving different business objectives.

Image Classification

Image classification assigns predefined labels or categories to entire images based on their content. A classifier examines an image and predicts which category or class the image belongs to. Training occurs on labeled datasets where each image is associated with one or more correct labels.

Types of Image Classification –

- Single-Label Classification – Each image receives exactly one label, answering “what is the main object in this image?” Applications include identifying whether an image contains a cat, dog, car, or other specific object.

- Multi-Label Classification – Images receive multiple labels recognizing multiple objects within a single image. For instance, an image might be labeled as containing a “cat,” “outdoor scene,” and “grass,” identifying all major elements present.

Applications – Product recognition in e-commerce, medical diagnosis from imaging, quality assessment in manufacturing, content moderation identifying inappropriate material, and accessibility features describing images for visually impaired users.

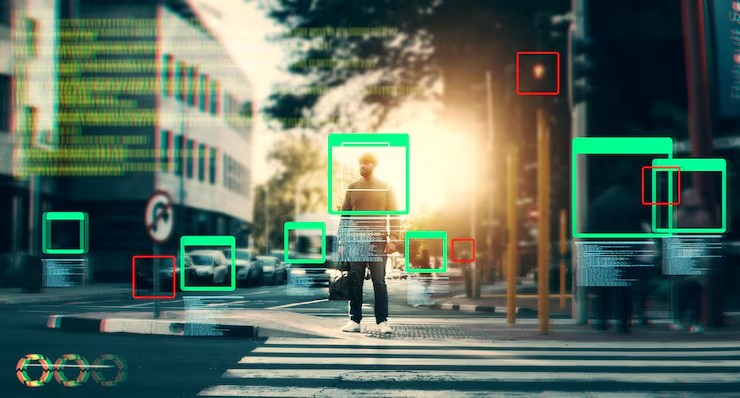

Object Detection

Object detection goes beyond simple classification by not only identifying what objects are present in an image but precisely locating them. The task combines two sub-tasks – object localization and object classification.

Detection Process – Object detection models analyze images systematically, identifying multiple objects simultaneously and generating bounding boxes rectangular regions marking object locations. These boxes include class labels and confidence scores indicating detection certainty.

Practical Applications – Autonomous vehicles detecting pedestrians, vehicles, and obstacles; retail systems monitoring shelf stock and identifying products; manufacturing quality control detecting defects and anomalies; security systems identifying suspicious individuals or activities; and medical imaging detecting tumors or abnormalities.

Image Segmentation

Image segmentation divides images into meaningful regions or segments, with each pixel assigned to a category. Unlike object detection which uses bounding boxes, segmentation provides pixel-level precision identifying exactly which pixels belong to which objects.

Segmentation Types –

- Semantic Segmentation – All pixels of the same object class receive the same label, but different instances of the same class aren’t distinguished.

- Instance Segmentation – Individual object instances are separated, enabling distinction between multiple objects of the same class.

- Panoptic Segmentation – Combines semantic and instance segmentation, treating “stuff” (background, sky) as semantic classes and “things” (people, cars) as individual instances.

Medical Applications – Isolating tumors from surrounding tissue in X-rays and MRI scans, identifying affected areas for surgical planning, measuring organ volumes, and quantifying disease progression.

Face and Person Recognition

Face recognition systems identify individuals from facial images by analyzing distinctive facial features and comparing them against known faces. Person recognition extends this to identifying individuals based on full-body characteristics, clothing, and movement patterns.

Advanced Capabilities –

- Facial Recognition – Identifying specific individuals, verifying identity claims, and enabling access control systems.

- Expression Recognition – Analyzing facial expressions to detect emotions (happiness, anger, confusion, surprise).

- Age and Gender Estimation – Predicting demographic attributes from facial features.

- Ethnicity Classification – Identifying ethnic characteristics (though with significant ethical concerns).

Applications – Security systems and surveillance identifying individuals in real-time, access control systems for secure facilities, photo organization and search in social media, emotional analysis in retail environments, and personalized marketing based on demographic inference.

Image Restoration

Image restoration improves degraded or corrupted images, removing noise and distortions introduced during capture or transmission. Enhancement techniques adjust contrast, brightness, and color to improve image quality or emphasize particular features.

Restoration Techniques –

- Denoising – Removing random noise added during image capture or transmission.

- Deblurring – Reversing motion blur or camera focus errors.

- Inpainting – Reconstructing missing or corrupted image regions.

- Super-Resolution – Increasing image resolution and detail from low-resolution inputs.

Applications – Medical imaging improving diagnostic image quality, satellite imagery enhancement for land surveying, photo restoration recovering old or damaged photographs, and video enhancement for surveillance footage analysis.

Edge Detection

Edge detection identifies sharp transitions in image intensity, which often correspond to object boundaries and important features. This foundational technique helps systems understand object shapes and structures.

Computer Vision Tools and Frameworks

Modern computer vision development relies on powerful frameworks and libraries that accelerate development and deployment.

OpenCV

OpenCV is the most popular open-source computer vision library offering over 2,500 algorithms for image processing, feature detection, and object tracking. Known for its speed and cross-platform support, it runs efficiently on devices from desktops to mobile. Its comprehensive algorithms make it ideal for real-time applications and preprocessing tasks. OpenCV is often paired with deep learning frameworks for enhanced performance and accuracy.

TensorFlow

TensorFlow, developed by Google, is a powerful open-source framework for building and deploying deep learning models. With GPU and TPU acceleration, it supports large-scale training and production deployment. The integrated Keras API simplifies model creation while maintaining flexibility for advanced customization. TensorFlow is best for end-to-end pipelines, transfer learning, and robust production applications.

PyTorch

PyTorch, created by Meta, is favored by researchers for its intuitive, dynamic computation graphs that enable rapid experimentation. Its Pythonic design allows easy debugging and flexible model development. A thriving open-source community contributes cutting-edge models and tools. PyTorch excels in research, academic projects, and increasingly in production as deployment tools evolve.

YOLO (You Only Look Once)

YOLO is a real-time object detection framework that predicts bounding boxes and class probabilities in a single forward pass. It delivers high-speed detection without compromising accuracy, making it ideal for time-sensitive applications. Pre-trained models on large datasets enable quick deployment. Common uses include autonomous vehicles, surveillance, and retail analytics.

Computer Vision Applications

Computer vision transforms industries by automating visual analysis tasks previously requiring human expertise, improving accuracy and efficiency while enabling new capabilities.

Healthcare and Medical Imaging

Computer vision revolutionizes healthcare by analyzing medical images such as X-rays, CT scans, and MRIs to detect diseases like cancer and fractures with high accuracy. It assists surgeons through real-time visualization, improving precision and reducing recovery times. Vision-based monitoring systems track patient movements, detect distress, and prevent falls. These applications enhance diagnostics, patient safety, and operational efficiency in medical environments.

Autonomous Vehicles and Transportation

Computer vision is the foundation of autonomous driving, enabling vehicles to recognize lanes, traffic signals, pedestrians, and obstacles. ADAS features like automatic braking and lane departure alerts rely on real-time image analysis to prevent accidents. Fleet management systems use vision to monitor driver behavior, detect fatigue, and record incidents. These innovations improve road safety, efficiency, and the overall driving experience.

Retail and E-Commerce

In retail, computer vision powers inventory monitoring, checkout-free shopping, and customer behavior analysis. Smart shelves track stock levels and misplaced items automatically, while systems like Amazon Go enable seamless, cashier-less purchases. Vision analytics reveal shopping patterns, optimizing store layout and product placement. It also supports personalized recommendations, improving engagement and boosting sales.

Agriculture and Farming

Computer vision enhances agriculture through drone-based crop monitoring, identifying pests, diseases, and nutrient deficiencies. It supports precision farming by optimizing irrigation, fertilization, and pesticide use for specific field areas. Automated harvesting systems use vision to identify ripe crops and guide robotic equipment. These applications increase yield, cut waste, and improve resource efficiency.

Conclusion

Computer vision enables machines to analyze visual information with speed, accuracy, and consistency exceeding human capabilities in many tasks. From detecting life-threatening diseases in medical scans to guiding autonomous vehicles safely through complex environments, from monitoring agricultural crops to ensuring manufacturing quality, computer vision is transforming how organizations operate and solve problems.

As algorithms improve, hardware accelerates, and datasets expand, computer vision applications will continue multiplying across industries. Organizations not yet implementing computer vision are falling behind competitors who have already gained efficiency improvements, cost reductions, and new capabilities. For businesses pursuing digital transformation and intelligent automation, computer vision is no longer optional – it’s essential infrastructure for competitive advantage.